MEMTABLE

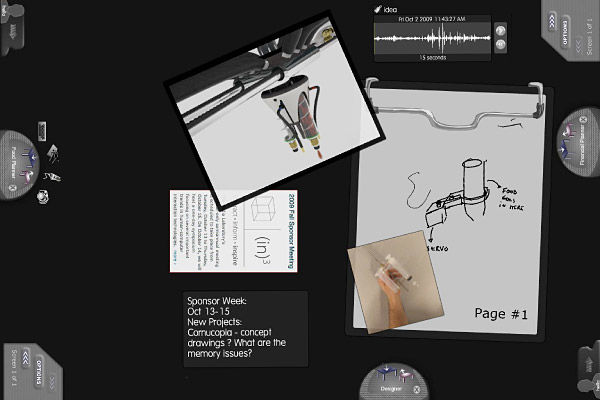

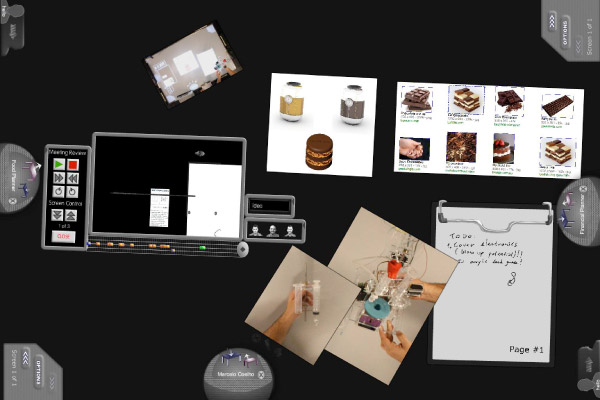

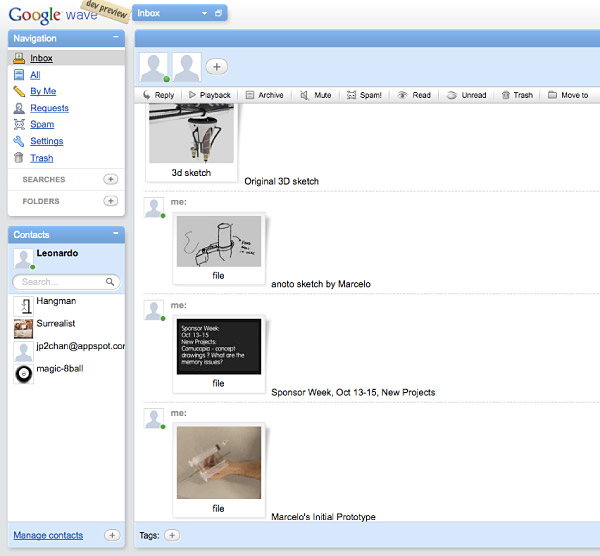

MemTable is an interactive touch table that supports co-located group meetings by capturing both digital and physical interactions in its memory. Everyone can be a scribe at the MemTable. The goal of the project is to demonstrate hardware and software design principles that integrate recording, recalling, and reflection during the life cycle of a project in one tabletop system. The project has been in development over the past year and was my master's thesis at the MIT Media Lab in 2009.

MemTable poses an important question to HCI designers: How can the multi-person interactions we design be integrated with our work practices into systems that have history and memory? What is the social computing space of the future?

This video demonstrates many of the features of the MemTable platform. Videos of actual meetings are below, for a realistic picture of the table in day-to-day use.

MemTable's hardware design prioritizes ergonomics, social interaction, structural integrity, and streamlined implementation. Its software supports heterogeneous input modalities for a variety of contexts: brainstorming, decision making, event planning, and storyboarding. The user interface introduces personal menus, capture elements, and tagging for search.

Users in this meeting were discussing potential themes for a restaurant in their neighborhood. A chef, an architect, a designer, and a financial advisor each had individual concerns that they could record to the table. The table's physical design also creates an ergonomic and formal setting for structured collaborative discussions, where any person can be a scribe at any time.

Use case example, photo 1: Marcelo and Natan had a meeting to discuss a new prototype of a food printer before demo week. Here is a screenshot of the review pane. Note the five different types of input supported by the system: text, image capture, sketching, laptop capture, and audio.

Use case example, photo 2: Marcelo and Natan bring out version 2 of their prototype and sketch improvements together.

Use case example, photo 3: Later, Marcelo is able to go back and see images of the physical prototype from their second meeting, along with their notes and sample foods from a web search. On the left is a screenshot of the review pane. Clicking on a user's profile highlights that user's items on the timeline, and items can be dragged into the current meeting at any time.

Use case example, photo 4: This semester Jenny Chan and I have been building a Google Wave application that syncs all the items from a meeting to a Google Wave for each event. Users can tag and search from Google Wave, as well as review and annotate events from the meeting. This connects the table to the cloud and demonstrates one way that content from our physical workspaces might be integrated with our digital collaboration tools.

Here is a view of the physical table. I built it in collaboration with Steelcase, thanks to the generous help of Joe Branc and the ergonomics experts there. It consists of two projectors, two cameras, and two mirrors. The 8-inch footrest and the ability to put your knees under the table are significant improvements over existing meeting table designs in the current tabletop research canon. I worked on this table for about 8 hours a day for 6 months, along with assistants and other people who were drawn to sit and work in our space.