How can we use objects in the real world to control creative content on the screen? VisionPlay is a codebase that extracts information from live video and links it in real time to a world on the screen. The framework provides tools for extraction, identification, and enhancement, supporting applications in three scenarios: digital puppetry, seeing yourself in the story, and playing with objects at a distance.

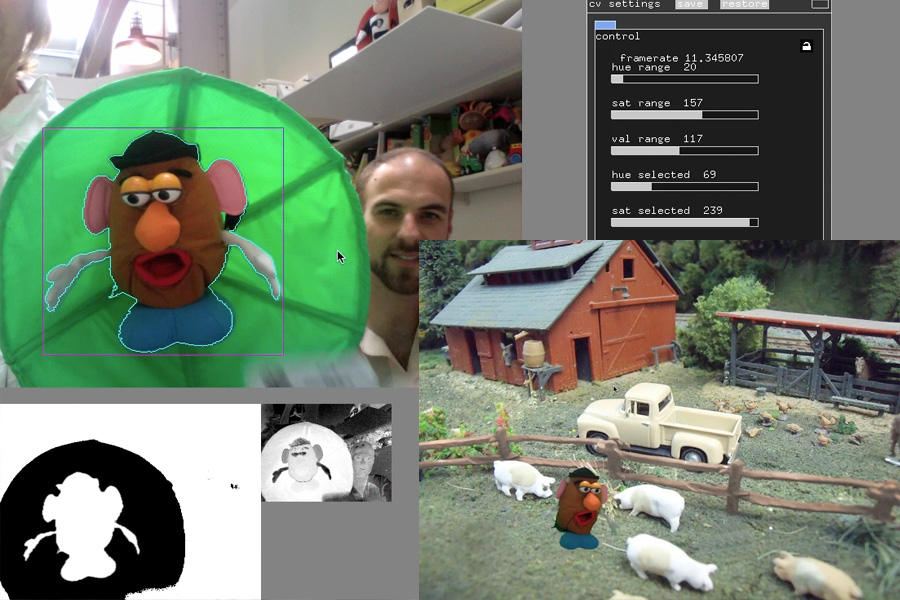

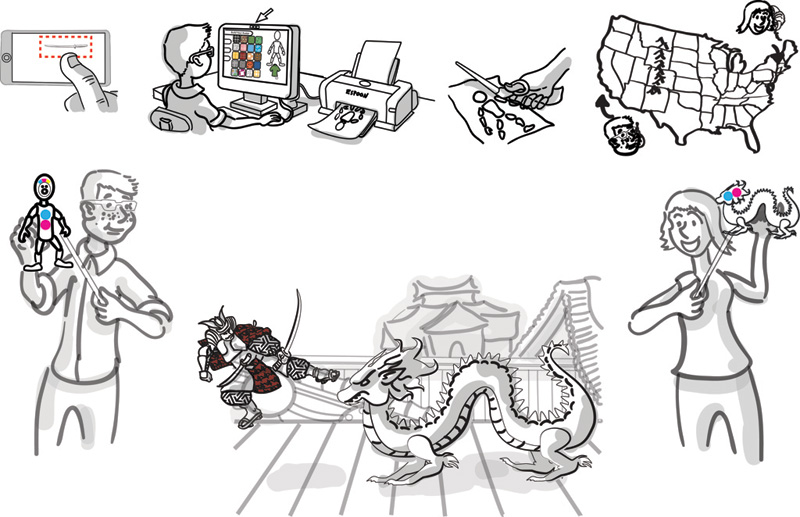

During my fellowship with Hasbro Toy Company I implemented four examples of ways to use physical objects to create digital content: a green-screen glove that places content in a 2.5D perspective world, a tracking engine for puppets and action figures, a laptop green-screen theatre, and a telepresence puppetry platform. They are grouped here to illustrate the broad possibilities for creative improvisation, play, and performance with objects, supported by emerging technologies.
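The post does not show the green-screen code itself. As a hedged illustration of the general chroma-key idea behind the glove and the laptop theatre, the sketch below replaces any pixel whose green channel clearly dominates red and blue with the corresponding pixel from a backdrop. The `chroma_key` and `is_green` helpers, the plain-list image format, and the threshold of 40 are all assumptions made for illustration, not VisionPlay's actual API.

```python
from typing import List, Tuple

# Hypothetical image format for illustration: rows of (r, g, b) tuples, 0-255.
Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def is_green(pixel: Pixel, threshold: int = 40) -> bool:
    """Treat a pixel as green screen if green exceeds both red and blue by `threshold`."""
    r, g, b = pixel
    return g - max(r, b) > threshold


def chroma_key(foreground: Image, backdrop: Image, threshold: int = 40) -> Image:
    """Replace green-screen pixels in `foreground` with the matching `backdrop` pixels.

    Hypothetical helper, not part of VisionPlay; both images must share dimensions.
    """
    if len(foreground) != len(backdrop) or any(
        len(f_row) != len(b_row) for f_row, b_row in zip(foreground, backdrop)
    ):
        raise ValueError("foreground and backdrop must have the same dimensions")
    return [
        [bg if is_green(fg, threshold) else fg for fg, bg in zip(f_row, b_row)]
        for f_row, b_row in zip(foreground, backdrop)
    ]


if __name__ == "__main__":
    GREEN, RED, SKY = (20, 220, 30), (200, 30, 30), (100, 150, 255)
    frame = [[GREEN, RED], [RED, GREEN]]  # a toy "puppet" in front of a green screen
    scene = [[SKY, SKY], [SKY, SKY]]      # the digital world to composite into
    print(chroma_key(frame, scene))
```

A production keyer would more likely work in a color space such as HSV or YCbCr and soften the mask edges; the hard per-pixel test here only shows the core idea.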

I gave a series of performances at Harvard in the puppetry seminar taught by John Bell (of Bread and Puppet), using a green-screen theatre in which toys argued for a place in children's digital experiences as well as their physical ones.

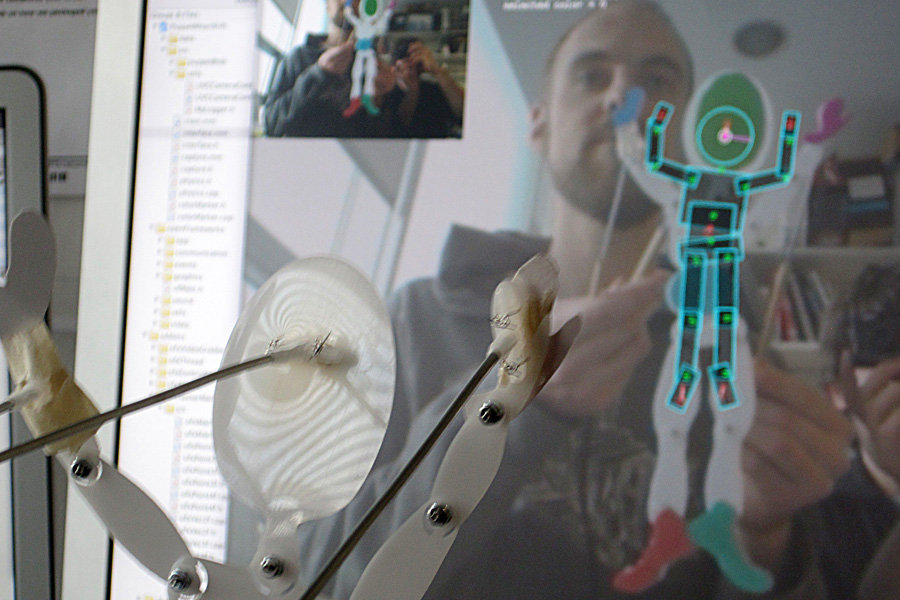

After my time at Hasbro I began working on computer vision algorithms to track toys and puppets without needing a green screen. When the physical form of the puppet closely matches the character on the screen, the user can be more expressive and exercise greater control over the digital character.

Could two people use objects to compose animations at a distance? My long-term vision for this work eventually became WaaZam, but these early sketches pointed toward an approach that has informed the work we are doing at present.

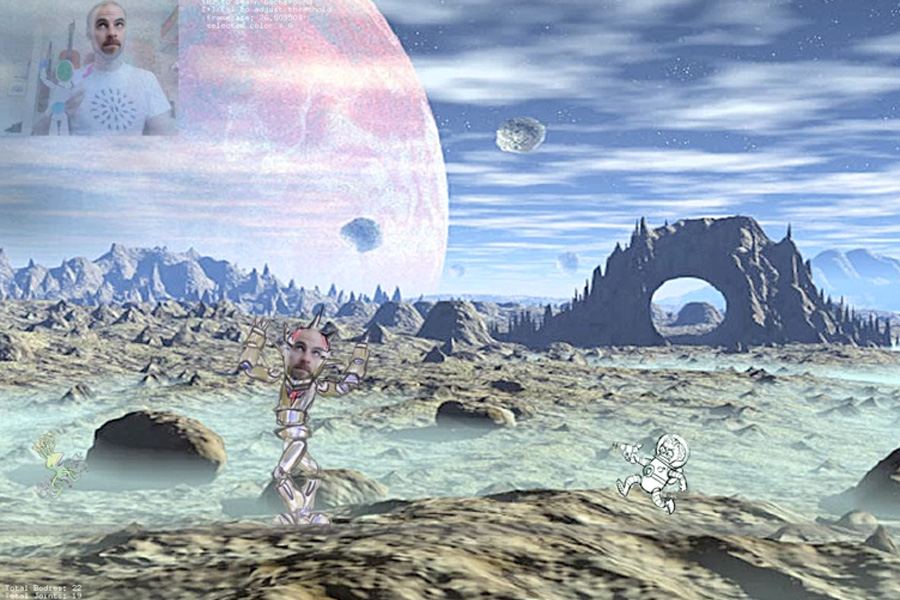

Putting my face on the puppet in real time was interesting, but it made it difficult to focus on the character's movements. Someday I'd like to build a version where you act something out first and then perform the character's face as a second track: a multi-track, real-time animation platform.

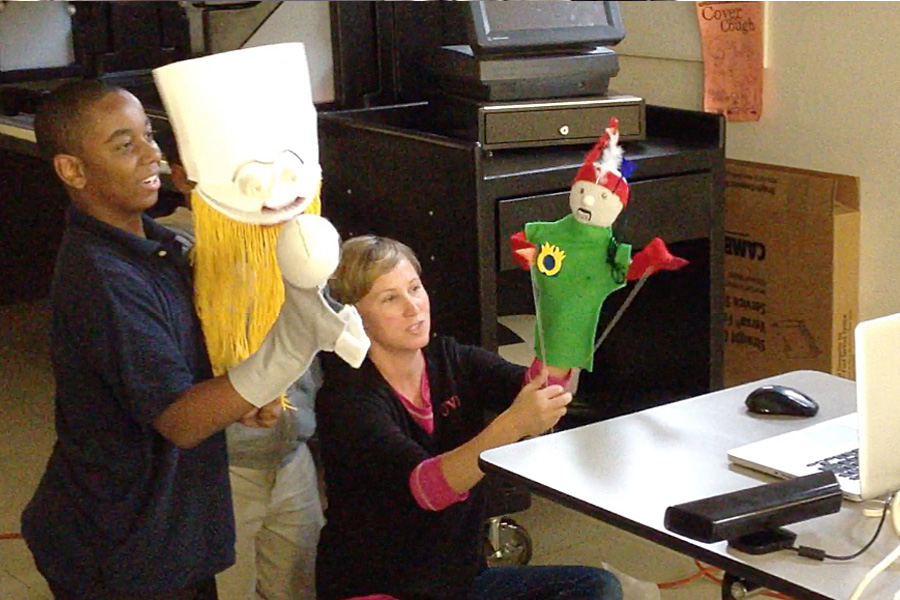

When the Kinect came out, my first inclination was to use it for the same purpose we had explored at Hasbro with green screens. It works really well for large puppets like the ones shown here. We wrote some simple algorithms to track the hand based on the skeleton and to identify the foreground objects, but it will be a while before depth cameras can produce cleanly segmented edges.
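As a rough sketch of what tracking the hand from the skeleton and picking out a held object could look like, the function below keeps depth pixels that are close to the tracked hand joint both in the image plane and in depth, on the assumption that a puppet held in the hand sits at roughly the same distance from the camera. The function name, the millimetre depth units with 0 meaning "no reading", and the 150 mm / 60 px defaults are hypothetical choices for illustration, not the original algorithm.

```python
from typing import List, Tuple

# Assumed format: depth in millimetres per pixel, 0 meaning "no reading".
DepthMap = List[List[int]]
Mask = List[List[bool]]


def segment_held_object(
    depth: DepthMap,
    hand_xy: Tuple[int, int],
    band_mm: int = 150,
    radius_px: int = 60,
) -> Mask:
    """Mark pixels near the tracked hand both in the image and in depth.

    Hypothetical helper. `hand_xy` is the skeleton's hand joint projected into
    depth-image coordinates as (column, row).
    """
    hx, hy = hand_xy
    hand_depth = depth[hy][hx]
    if hand_depth == 0:
        raise ValueError("no depth reading at the hand joint")
    mask: Mask = []
    for y, row in enumerate(depth):
        mask_row = []
        for x, d in enumerate(row):
            # Within a circle around the hand in the image plane...
            near_in_image = (x - hx) ** 2 + (y - hy) ** 2 <= radius_px ** 2
            # ...and within a depth band around the hand (ignoring missing readings).
            near_in_depth = d != 0 and abs(d - hand_depth) <= band_mm
            mask_row.append(near_in_image and near_in_depth)
        mask.append(mask_row)
    return mask


if __name__ == "__main__":
    depth = [
        [2000, 2000, 2000, 2000],  # back wall
        [2000, 1200, 1250, 2000],  # hand and puppet about 1.2 m away
        [2000, 1180, 0, 2000],     # 0 = missing reading near an edge
    ]
    for row in segment_held_object(depth, (1, 1)):
        print(row)
```

Because the mask is a hard threshold on per-pixel depth, any missing or noisy readings along object boundaries carry straight into the mask, which tends to leave the ragged edges described above.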